
Overview of reading data

Framing the problem

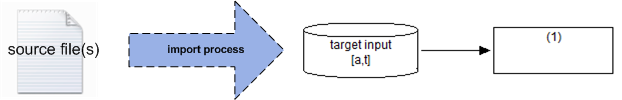

On the face of it, reading (or importing) data into a model is straightforward. An input target object is filled with data from one or more source files (Figure 1).

However, in practice there are complicating factors such as:

- The categories/sets which define the input object data are labeled and/or ordered differently from those of the source files.

- The source and object categories are fundamentally different, so the source data require aggregation or splitting (generically called mapping).

- The time dimension of the object may span multiple source files, requiring time-series assembly. There may also be temporal gaps requiring interpolation or projection.

- Multidimensionality can cause the internal structure of the source file(s) to be complex, or the number of source files to proliferate.
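The relabeling and mapping problems listed above can be illustrated outside TOOL. The following Python sketch is a generic illustration, not the whatIf/TOOL mechanism. Source values are keyed by label, so their order in the source file does not matter. A concordance table of (source, target, share) rows then aggregates categories many-to-one and splits them one-to-many. The category names, the `concordance` layout, and the rule that each source label's shares must sum to 1 are all assumptions made for this example.

```python
from collections import defaultdict

# Hypothetical source data, keyed by the source file's own category labels.
# Keying by label rather than by position makes the source ordering irrelevant.
source = {
    "wheat": 120.0,
    "barley": 30.0,
    "oats": 10.0,
    "mixed farming": 50.0,
}

# Hypothetical concordance rows: (source label, target label, share).
# Many-to-one rows aggregate; one-to-many rows split a source value.
concordance = [
    ("wheat", "grains", 1.0),
    ("barley", "grains", 1.0),
    ("oats", "grains", 1.0),
    ("mixed farming", "grains", 0.4),
    ("mixed farming", "livestock", 0.6),
]


def map_categories(source, concordance, tol=1e-9):
    """Aggregate and/or split source values into target categories."""
    # Each source label's shares must sum to 1 so that totals are preserved.
    share_totals = defaultdict(float)
    for src, _, share in concordance:
        share_totals[src] += share
    bad = {src: t for src, t in share_totals.items() if abs(t - 1.0) > tol}
    if bad:
        raise ValueError(f"shares do not sum to 1 for: {bad}")

    # Fail loudly on source labels the concordance does not cover.
    unmapped = set(source) - set(share_totals)
    if unmapped:
        raise KeyError(f"no mapping for source labels: {sorted(unmapped)}")

    target = defaultdict(float)
    for src, tgt, share in concordance:
        target[tgt] += source.get(src, 0.0) * share
    return dict(target)


print(map_categories(source, concordance))
# → {'grains': 180.0, 'livestock': 30.0}
```

Keeping the mapping in a table rather than in hard-coded arithmetic makes it reviewable, and the share check catches a split that would silently lose or double-count data.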

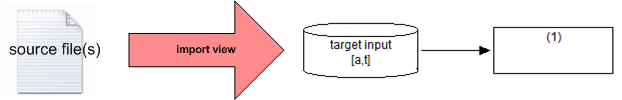

Therefore, some data processing is required to bridge the gap between the source data and the target object. This can be accomplished in a number of ways, but a simple approach is shown in Figure 2. Here the source files are assembled and processed through a script (or view, in whatIf terminology) written in TOOL.

Figure 2 - Importing and processing source data with a view
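Time-series assembly and gap filling are typical parts of the processing such a view performs, and they can be sketched as follows. This is a hypothetical Python illustration, not TOOL code. Each source file is represented as a year-to-value dictionary, and where files overlap the later file is assumed to override the earlier one. Interior gaps are filled by linear interpolation. Years outside the known data are left empty, because projection is a separate modelling choice. The function names and sample data are invented for the example.

```python
import bisect


def assemble_series(*pieces):
    """Merge year -> value dictionaries from several source files.

    Assumption: where files overlap, the later piece overrides the earlier one.
    """
    series = {}
    for piece in pieces:
        series.update(piece)
    return dict(sorted(series.items()))


def interpolate_gaps(series, first, last):
    """Return values for every year in [first, last].

    Interior gaps are filled by linear interpolation between the nearest
    known years; years before or after the known data are set to None,
    since filling them would be projection rather than interpolation.
    """
    known = sorted(series)
    result = {}
    for year in range(first, last + 1):
        if year in series:
            result[year] = series[year]
            continue
        i = bisect.bisect_left(known, year)
        if i == 0 or i == len(known):
            result[year] = None  # outside the data: needs projection
            continue
        y0, y1 = known[i - 1], known[i]
        v0, v1 = series[y0], series[y1]
        result[year] = v0 + (v1 - v0) * (year - y0) / (y1 - y0)
    return result


# Hypothetical source files covering overlapping periods.
file_a = {1995: 10.0, 1996: 11.0, 1997: 12.0}
file_b = {1997: 12.5, 2000: 14.0}

series = assemble_series(file_a, file_b)
print(interpolate_gaps(series, 1995, 2001))
# → {1995: 10.0, 1996: 11.0, 1997: 12.5, 1998: 13.0, 1999: 13.5, 2000: 14.0, 2001: None}
```

The source data determine whether later files should take precedence and whether linear interpolation is appropriate. Both choices are worth recording next to the import.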

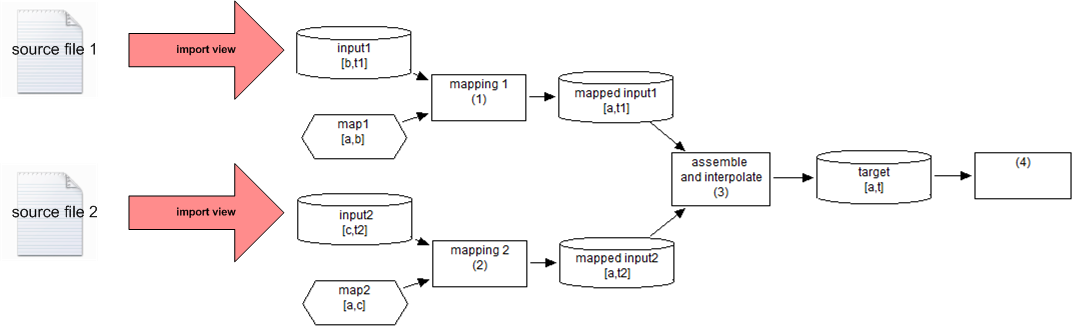

Embedding a large amount of processing logic in a view, rather than in the framework diagram, has drawbacks such as reduced transparency. To mitigate this, the diagram structure can be “grown” further back to “meet” the source data, as shown in Figure 3. Import views are still required, but compared with the implied view logic of Figure 2, the views in Figure 3 focus more on simply importing and less on processing.

Figure 3 - Importing source data with views; processing with diagram model structure

In some special cases, additional pre-processing is performed in another language or tool (e.g. awk, Perl, R). If the pre-processing task is sufficiently large and complex, a separate whatIf model framework (often called a database model) might be developed instead.

The problem described here arises throughout the broader model development cycle. It is most intensive in the data assembly and calibration stage, which involves historical data, but it also appears during scenario creation with external forecasts and projections. The target objects themselves are defined during the model design stage.

Import "channels"

- stand-alone TOOL scripts

- reading data through Documenter for interactive use, prototyping, and testing

- views created in SAMM (import views)

Considerations and best practices

- Touch the source data files as little as possible, preferably not at all. This helps repeatability and preserves the audit trail back to the source data.

- Document source data origins in the diagram (variable description and notes fields).

- Name time dimensions explicitly.

- Import into objects (either diagram or view locals) in their native units of measure, i.e. with no “magic number” conversion hacks. Let TOOL's built-in unit handling and conversion do all the work.

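- Where possible, use coordinate data format, in which each record gives the category label on every dimension followed by the value. Because each value carries its own labels, the file does not depend on category ordering, missing combinations are simply absent, and multidimensional data fit in a single flat file.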


- Consider the trade-offs between processing in an import view, “growing” the diagram logic, using a stand-alone TOOL pre-processor script, and building a separate database model.
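Coordinate data format can be illustrated with a generic Python sketch. Here it is taken to mean one record per value, listing the label on each dimension followed by the value. The exact layout that whatIf/TOOL expects may differ. The functions `wide_to_coordinate`, `write_coordinate` and `read_coordinate` and the sample table are hypothetical. The sketch unpivots a wide table (regions down the rows, years across the columns) into coordinate records and reads them back into a dictionary keyed by coordinates.

```python
import csv
import io

# Hypothetical wide-format source: regions down the rows, years across the
# columns. The blank cell means "no data" for north in 2021.
WIDE = """region,2019,2020,2021
north,1.5,1.7,
south,2.0,2.1,2.4
"""


def wide_to_coordinate(text, row_dim, col_dim):
    """Unpivot a wide table into coordinate records, one per value.

    Blank cells are skipped rather than written as zeros, so missing data
    stay missing instead of becoming spurious values.
    """
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    records = []
    for row in reader:
        if not row:
            continue  # ignore empty lines
        label, cells = row[0], row[1:]
        for col_label, cell in zip(header[1:], cells):
            if cell.strip():
                records.append({row_dim: label, col_dim: col_label, "value": float(cell)})
    return records


def write_coordinate(records, dims):
    """Serialize records as CSV: one column per dimension, then the value."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=[*dims, "value"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return out.getvalue()


def read_coordinate(text, dims):
    """Load coordinate records into {(label, ...): value}.

    Each record carries its own labels, so row order is irrelevant;
    duplicate coordinates are rejected.
    """
    data = {}
    for rec in csv.DictReader(io.StringIO(text)):
        key = tuple(rec[d] for d in dims)
        if key in data:
            raise ValueError(f"duplicate coordinate: {key}")
        data[key] = float(rec["value"])
    return data


records = wide_to_coordinate(WIDE, row_dim="region", col_dim="year")
coord_text = write_coordinate(records, ["region", "year"])
print(coord_text)
```

For the sample table this yields five records, and the blank north/2021 cell produces no record at all. Reading the records back gives the same result whatever order the rows are in. Running a conversion like this as a script, rather than editing files by hand, keeps the step repeatable and leaves the original source files untouched.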